Moving workloads to the cloud is not just about spinning up servers or deploying apps. It is about building something that lasts: something that’s secure, efficient and ready to scale as your business grows. That’s where the AWS Well-Architected Framework comes in.

It helps cloud architects, developers and DevOps teams make better decisions when designing systems that are resilient, secure and optimized for performance and cost.

What Is the AWS Well-Architected Framework?

The AWS Well-Architected Framework is a collection of key concepts, design principles and best practices for designing and running workloads in the cloud.

The Six Pillars of the AWS Well-Architected Framework

The framework is built around six core pillars:

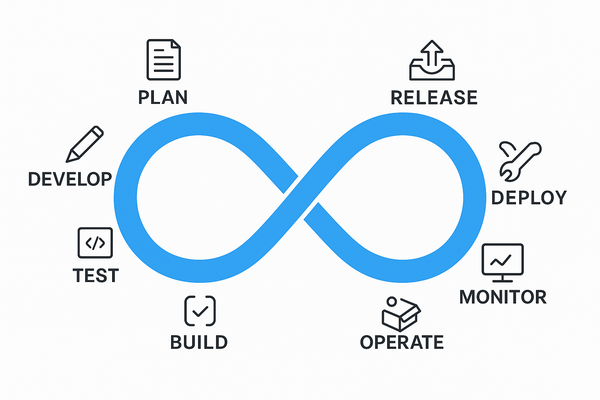

1. Operational Excellence

Goal: Run and monitor systems effectively to deliver business value and continuously improve.

This pillar focuses on automation, monitoring and incident response.

You learn to document everything, evolve your procedures, and design systems that can be easily operated.

Key takeaway: Build operations as code. Automate repetitive tasks and always keep improving.
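
To make "operations as code" concrete, here is a minimal sketch of a runbook written as ordered Python steps instead of a wiki page. The `Runbook` class, the runbook name and the three step functions are all hypothetical placeholders, not part of any AWS service or SDK; real steps would call your own tooling.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Runbook:
    """An ordered list of named steps that can be run and audited."""
    name: str
    steps: List[Tuple[str, Callable[[], bool]]] = field(default_factory=list)
    log: List[str] = field(default_factory=list)

    def step(self, description: str):
        """Decorator that registers a function as the next step."""
        def register(func: Callable[[], bool]) -> Callable[[], bool]:
            self.steps.append((description, func))
            return func
        return register

    def run(self) -> bool:
        """Run steps in order and stop at the first one that reports failure."""
        for description, func in self.steps:
            ok = func()
            self.log.append(f"{'OK' if ok else 'FAILED'}: {description}")
            if not ok:
                return False
        return True


# Hypothetical runbook: the step bodies stand in for real checks and actions.
restart = Runbook("restart-web-tier")


@restart.step("Drain traffic from the instance")
def drain() -> bool:
    return True


@restart.step("Restart the application service")
def restart_service() -> bool:
    return True


@restart.step("Verify the health check passes")
def verify() -> bool:
    return True
```

Calling `restart.run()` executes the steps in order and leaves an audit trail in `restart.log`. Because the procedure lives in version control, it can be reviewed and improved like any other code.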

2. Security

Goal: Protect data, systems and assets using cloud-native security practices.

AWS encourages a defense-in-depth approach—secure every layer from identity and access to data encryption.

Key takeaway: Security is everyone’s responsibility. Protect, detect and respond continuously.
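
One layer of that defense is least-privilege access. As a rough sketch, the snippet below builds an IAM policy document that allows read-only access to a single S3 bucket and nothing else. The bucket name and the helper function are assumptions made for illustration; the JSON structure and the `s3:ListBucket` / `s3:GetObject` actions follow the standard IAM policy format.

```python
import json

# Hypothetical bucket name, used only for illustration.
BUCKET = "example-app-reports"


def read_only_bucket_policy(bucket: str) -> dict:
    """Build an IAM policy that allows listing and reading one bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOneBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                # ListBucket applies to the bucket itself.
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                "Sid": "ReadObjectsInOneBucket",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # GetObject applies to the objects inside the bucket.
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


policy_json = json.dumps(read_only_bucket_policy(BUCKET), indent=2)
```

The point is the shape, not the specific bucket: no wildcard actions, and resources scoped to exactly what the workload needs.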

3. Reliability

Goal: Ensure your workload performs correctly and consistently even when things go wrong.

It’s all about resiliency, fault tolerance and disaster recovery. Design for failure because in the cloud, it’s inevitable, but manageable.

Key takeaway: Don’t hope systems won’t fail; design them to recover when they do.
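
A common way to design for failure is to retry transient errors with exponential backoff and jitter. The sketch below is one possible version, not a prescribed AWS implementation; the function name, the default values and the injectable `sleep` parameter are assumptions chosen for illustration.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation, retrying failures with capped exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception:
            # Illustration only: real code should catch just the errors
            # it knows to be transient (timeouts, throttling, and so on).
            if attempt == max_attempts:
                raise
            # Full jitter: wait a random time up to the capped exponential delay.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise ValueError("max_attempts must be at least 1")
```

Wrapping a network call as `call_with_retries(lambda: fetch_order(order_id))` (where `fetch_order` is your own function) lets a brief outage pass without surfacing an error, while the jitter keeps many clients from retrying in lockstep.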

4. Performance Efficiency

Goal: Use computing resources efficiently to meet system requirements and maintain performance as demand changes.

This means choosing the right instance types, storage options and database solutions to optimize speed and scalability.

Key takeaway: Continuously review and evolve your architecture as technology evolves.

5. Cost Optimization

Goal: Avoid unnecessary costs and maximize the business value from every dollar spent.

AWS gives you visibility and tools like Cost Explorer and Budgets to monitor and control spending.

Key takeaway: Pay only for what you use—and always look for smarter ways to save.
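
AWS Budgets handles spend alerting as a managed service, so you would not normally write this yourself. Still, the underlying idea is simple enough to sketch: compare spend against a budget and flag which alert thresholds have been crossed. The function names, the threshold values and the straight-line forecast below are assumptions for illustration, not how AWS computes its forecasts.

```python
from typing import List, Sequence


def crossed_thresholds(
    spend: float,
    budget: float,
    thresholds: Sequence[float] = (0.5, 0.8, 1.0),
) -> List[float]:
    """Return the alert thresholds (as fractions of budget) already reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    used = spend / budget
    return [t for t in thresholds if used >= t]


def forecast_month_end(spend: float, days_elapsed: int, days_in_month: int) -> float:
    """Naive forecast: assume the average daily spend so far continues."""
    if days_elapsed <= 0:
        raise ValueError("days_elapsed must be positive")
    return spend / days_elapsed * days_in_month
```

For example, a workload that has spent 300 in the first 10 days of a 30-day month is on track for 900 by this naive estimate. Checking that forecast against the budget, and not just the spend so far, gives you time to react before the bill arrives.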

6. Sustainability

Goal: Minimize the environmental impact of your cloud workloads.

This newer pillar focuses on using resources responsibly, choosing energy-efficient regions and optimizing workloads to reduce their carbon footprint.

Key takeaway: Build green architectures that are efficient and sustainable for the planet.

Why It Matters

Applying the AWS Well-Architected Framework helps keep your systems resilient, cost-effective and future-ready.

Whether you’re a startup building your first cloud app or an enterprise migrating legacy workloads, this framework acts as your trusted compass in the cloud journey.

By regularly reviewing your workloads against the six pillars, you’ll not only identify risks early but also make informed improvements that drive long-term success.
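
If you track review findings yourself, a small tally per pillar makes it easy to see where the risk is concentrated. The sketch below assumes two risk levels, high and medium, and uses a hypothetical `Finding` record and `summarize` helper; it is not the data model of any AWS tool.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable

PILLARS = (
    "Operational Excellence",
    "Security",
    "Reliability",
    "Performance Efficiency",
    "Cost Optimization",
    "Sustainability",
)
RISK_LEVELS = ("high", "medium")


@dataclass(frozen=True)
class Finding:
    """One issue raised during a workload review (hypothetical record)."""
    pillar: str
    risk: str
    note: str

    def __post_init__(self) -> None:
        if self.pillar not in PILLARS:
            raise ValueError(f"unknown pillar: {self.pillar}")
        if self.risk not in RISK_LEVELS:
            raise ValueError(f"unknown risk level: {self.risk}")


def summarize(findings: Iterable[Finding]) -> Dict[str, Dict[str, int]]:
    """Count findings per pillar and risk level, including pillars with none."""
    counts = Counter((f.pillar, f.risk) for f in findings)
    return {
        pillar: {risk: counts[(pillar, risk)] for risk in RISK_LEVELS}
        for pillar in PILLARS
    }
```

Running `summarize` after each review and comparing it with the previous result shows whether the high-risk count is actually going down over time.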

Final Thoughts

Cloud architecture isn’t just about deploying resources—it’s about building smart, secure, and sustainable systems.

The AWS Well-Architected Framework provides the guidance to help you do exactly that, balancing performance, cost, and reliability while keeping security and sustainability at the heart of it all.

So the next time you design or review a workload, remember these six pillars. They’re not just best practices; they’re the foundation of every great cloud architecture.

What’s Next?

The journey is ongoing. I’m glad to have you along for the ride.

- Follow our tutorials

- Explore more Dev+Ops engineer career guides

- Subscribe to InsightClouds for weekly updates

DevOps tutorial: https://www.youtube.com/embed/6pdCcXEh-kw?si=c-aaCzvTeD2mH3Gv