Every time you open a website, send an email or use an app, DNS is working silently in the background.

However, most people use the internet daily without understanding this critical system.

In this blog series, we will understand DNS (Domain Name System) from scratch, step by step, without assuming any prior networking knowledge.

This is Part 1, where we will focus on:

- What DNS really is

- Why DNS was created

- How humans and computers communicate on the internet

- A simple, real-world explanation of DNS

By the end of this part, you will clearly understand why DNS is the backbone of the internet.

What Is DNS?

DNS stands for Domain Name System.

In simple words:

DNS converts human-readable website names into computer-readable IP addresses.

Example (the exact address varies by location and over time):

google.com → 142.250.190.78

Humans remember names easily.

Computers locate each other using numeric addresses.

DNS acts as a translator between humans and machines.
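To see this translation from a program, here is a minimal Python sketch that asks the operating system's resolver for the addresses behind a name. The helper name `resolve` is an illustrative choice, not something defined in this series, and the addresses returned for a real domain depend on your network and change over time.

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Return the unique IP addresses the system resolver reports for hostname."""
    # getaddrinfo hands the name to the operating system's resolver,
    # which uses DNS along with local sources such as the hosts file.
    results = socket.getaddrinfo(hostname, None, type=socket.SOCK_STREAM)
    # Each result is (family, type, proto, canonname, sockaddr);
    # the address string is the first element of sockaddr.
    return sorted({info[4][0] for info in results})


if __name__ == "__main__":
    try:
        # "localhost" usually resolves without network access;
        # swap in any domain name to query real DNS.
        print(resolve("localhost"))
    except socket.gaierror as error:
        print(f"lookup failed: {error}")
```

On a connected machine, `resolve("google.com")` returns whatever addresses your resolver hands back, which will likely differ from the single address shown in the example above.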

Why DNS Was Created

Let’s imagine the internet without DNS.

To open a website, you would need to remember IP addresses like:

142.250.190.78

151.101.1.69

104.244.42.1

Now imagine remembering hundreds of such numbers.

This is exactly why DNS was invented.

Before DNS

- Computers talked using IP addresses only

- Humans had to remember numbers or rely on a single hand-maintained hosts file (HOSTS.TXT)

- The internet was hard to use and hard to grow

After DNS

- Humans use names (google.com)

- Computers still use IP addresses

- DNS connects both worlds seamlessly

DNS made the internet usable, scalable and user-friendly.

What Is an IP Address?

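An IP address is a numerical identifier assigned to a device on a network. Public IP addresses are unique across the internet, while private ones (such as the IPv4 example below) are reused inside home and office networks.

Examples:

IPv4: 192.168.1.1

IPv6: 2001:db8::1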

Think of an IP address like:

- A house address

- A phone number

- A unique location identifier

Without IP addresses, computers wouldn’t know where to send data.
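The two example addresses can be inspected with Python's standard `ipaddress` module. This is a small sketch; the helper name `describe` and the dictionary keys are made up for illustration.

```python
import ipaddress


def describe(text: str) -> dict:
    """Parse an IP address string and report a few basic facts about it."""
    addr = ipaddress.ip_address(text)  # accepts both IPv4 and IPv6 text
    return {
        "version": addr.version,       # 4 or 6
        "bits": addr.max_prefixlen,    # 32 for IPv4, 128 for IPv6
        "as_integer": int(addr),       # the same address as one plain number
        "private": addr.is_private,    # True for ranges not routed publicly
    }


if __name__ == "__main__":
    for example in ("192.168.1.1", "2001:db8::1"):
        print(example, describe(example))
```

The `as_integer` field shows that the dotted or colon-separated notation is only a readable way to write one large number, which is what machines actually compare and route on.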

The Core Problem DNS Solves

Let’s simplify the problem:

- Humans want to use names

- Computers require numbers

- The internet needs a mapping system

That mapping system is DNS.

| Human Uses | Computer Uses |
|---|---|
| google.com | 142.250.190.78 |
| amazon.in | 176.32.103.205 |
| insightclouds.in | Server IP |

DNS stores and manages this mapping globally.
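As a mental model only, the mapping can be pictured as a lookup table. The sketch below uses a plain Python dictionary filled with two rows from the table; `RECORDS` and `lookup` are hypothetical names, and real DNS spreads this data across many servers instead of holding it in one place.

```python
from typing import Optional

# Toy name-to-address table using example rows from the table above.
RECORDS = {
    "google.com": "142.250.190.78",
    "amazon.in": "176.32.103.205",
}


def lookup(name: str) -> Optional[str]:
    """Return the stored address for name, or None if the table has no entry."""
    # Domain names are case-insensitive, and a trailing dot marks a fully
    # qualified name, so normalise both before searching.
    key = name.strip().rstrip(".").lower()
    return RECORDS.get(key)


if __name__ == "__main__":
    print(lookup("Google.com"))   # found despite the capital letter
    print(lookup("example.org"))  # not in this toy table
```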

DNS in One Simple Analogy

Think of DNS as a phone contact list.

| Phone Concept | DNS Concept |
|---|---|
| Contact Name | Domain Name |
| Phone Number | IP Address |
| Phonebook | DNS Server |

When you tap a contact name:

- Your phone finds the number

- Then places the call

When you type a website:

- DNS finds the IP

- Then your browser connects

How DNS Works (High-Level Overview)

At a very high level, DNS works like this:

- You type a website name in your browser

- Your system asks DNS: “What is the IP address of this domain?”

- DNS responds with the correct IP

- Your browser connects to that IP

- The website loads

At this stage, you don’t need to know the internal complexity.

That will be covered in Part 2.

For now, remember this:

DNS does not load websites. DNS only finds where websites live.
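The split between finding an address and connecting to it can be sketched in Python. This is an illustration under assumptions, not how a browser is implemented: the function names `find_ip` and `open_connection`, the default port 80, and the five-second timeout are all choices made for the example.

```python
import socket


def find_ip(hostname: str) -> str:
    """Ask the system resolver for one IPv4 address of hostname."""
    results = socket.getaddrinfo(
        hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    return results[0][4][0]  # sockaddr is (ip, port); keep the ip


def open_connection(hostname: str, port: int = 80, timeout: float = 5.0):
    """Resolve the name first, then connect to the address it maps to."""
    ip = find_ip(hostname)  # DNS's part ends once the address is known
    # From here on only the IP address is used; DNS is no longer involved.
    conn = socket.create_connection((ip, port), timeout=timeout)
    return ip, conn
```

Note that `find_ip` never touches the website itself. It only returns where the site lives, and a separate connection then does the actual loading.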

Is DNS a Server or a Service?

DNS is not just one server.

DNS is:

- A global distributed system

- Spread across thousands of servers

- Managed by multiple organizations

No single company owns DNS completely.

This design ensures:

- High availability

- Fault tolerance

- Global reliability

Even if some DNS servers fail, the internet continues to work.
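The fault-tolerance idea can be shown with a toy simulation. Nothing here talks to real DNS servers: `make_server`, `resolve_with_fallback`, and `ServerDown` are hypothetical names, and each "server" is just a Python function that either answers or fails.

```python
from typing import Callable, Optional, Sequence


class ServerDown(Exception):
    """Raised by a simulated server that is unreachable."""


def make_server(records: dict, up: bool = True) -> Callable[[str], Optional[str]]:
    """Build a fake DNS server: it answers from records, or fails if it is down."""
    def answer(name: str) -> Optional[str]:
        if not up:
            raise ServerDown(name)
        return records.get(name)
    return answer


def resolve_with_fallback(name: str, servers: Sequence[Callable]) -> Optional[str]:
    """Ask each server in turn and return the first answer that arrives."""
    for ask in servers:
        try:
            return ask(name)
        except ServerDown:
            continue  # this server failed; try the next one
    raise ServerDown(f"no server could answer for {name}")


if __name__ == "__main__":
    records = {"google.com": "142.250.190.78"}
    # Two of the three simulated servers are down, yet the lookup succeeds.
    servers = [
        make_server(records, up=False),
        make_server(records, up=False),
        make_server(records),
    ]
    print(resolve_with_fallback("google.com", servers))
```

The lookup only fails when every server in the list is down, which is the property that redundancy is meant to provide.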

Why DNS Is Critical for the Internet

Without DNS:

- Websites wouldn’t open

- Emails wouldn’t work

- APIs would fail

- Cloud services would break

DNS impacts:

- Website availability

- Application performance

- Email delivery

- Security

- SEO

That’s why DNS is considered internet infrastructure, not just a feature.

Common Misconceptions About DNS

“DNS hosts websites”

Wrong.

DNS only points to servers. Hosting happens elsewhere.

“DNS is optional”

Wrong.

Without DNS, the internet is practically unusable.

“DNS is simple”

Wrong.

DNS looks simple on the surface, but underneath it has a layered architecture and its own security mechanisms.

Who Should Understand DNS?

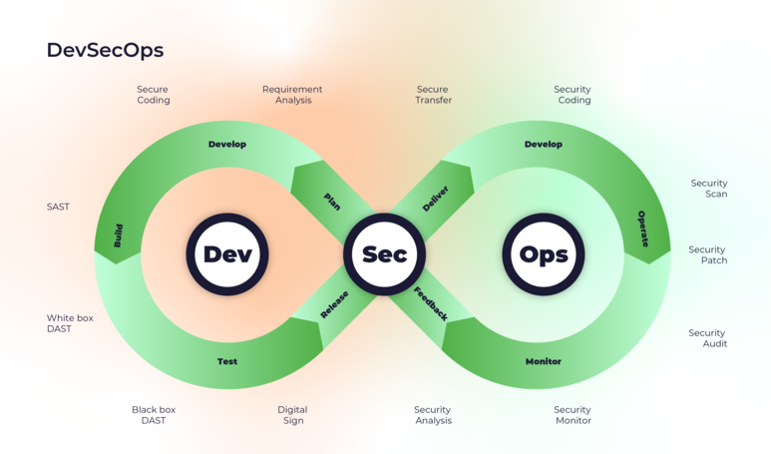

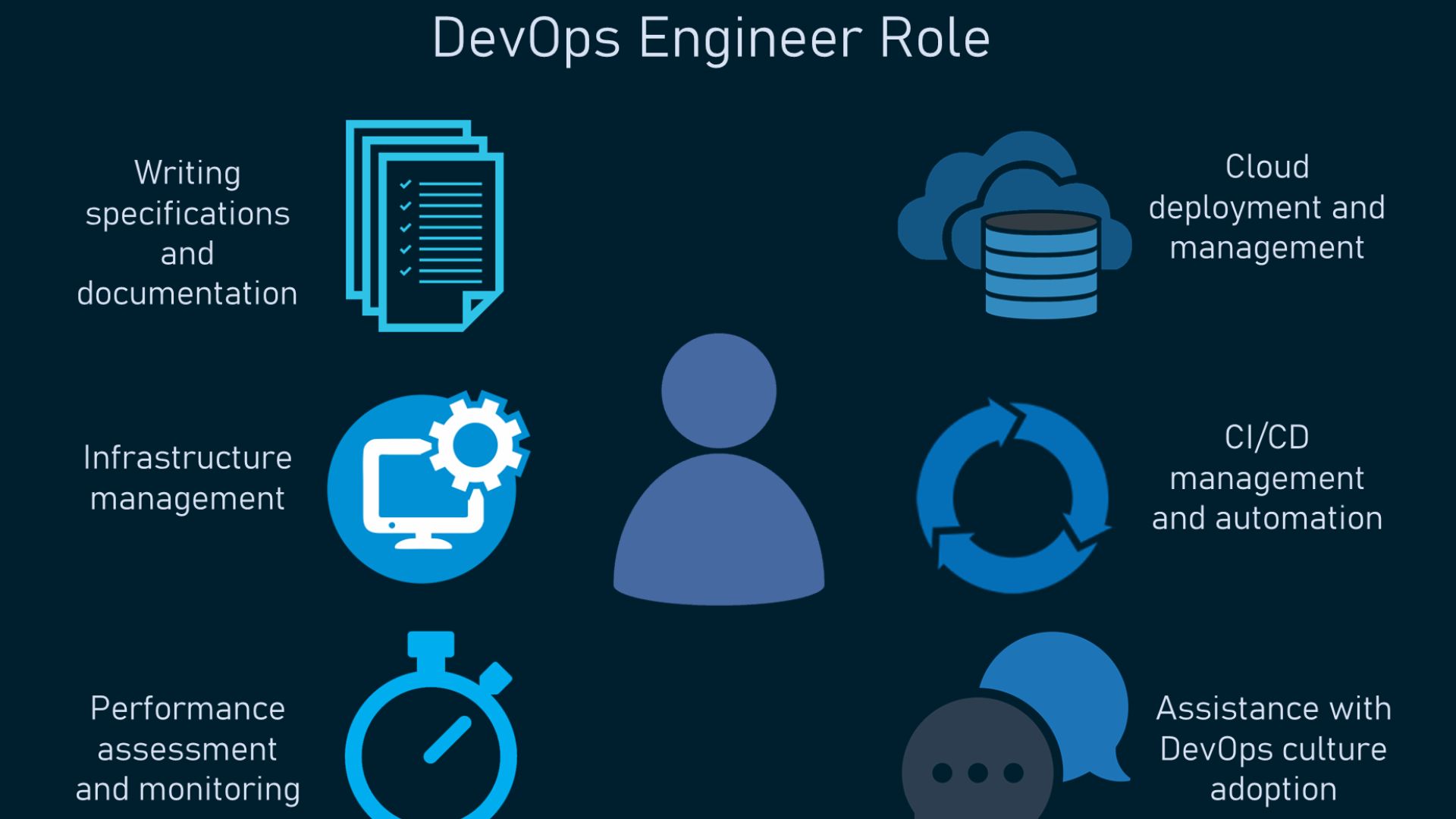

DNS knowledge is essential for:

- Developers

- DevOps engineers

- Cloud engineers

- SREs

- System administrators

- Website owners

- Bloggers and founders

Even basic DNS understanding prevents:

- Website downtime

- Email failures

- Misconfigurations

- Security risks

Summary of Part 1

In this first part, you learned:

- What DNS is

- Why DNS exists

- What IP addresses are

- How DNS helps humans and computers communicate

- Why DNS is a core part of internet infrastructure

You now understand what DNS does, without the internal complexity.

What’s Coming in Part 2

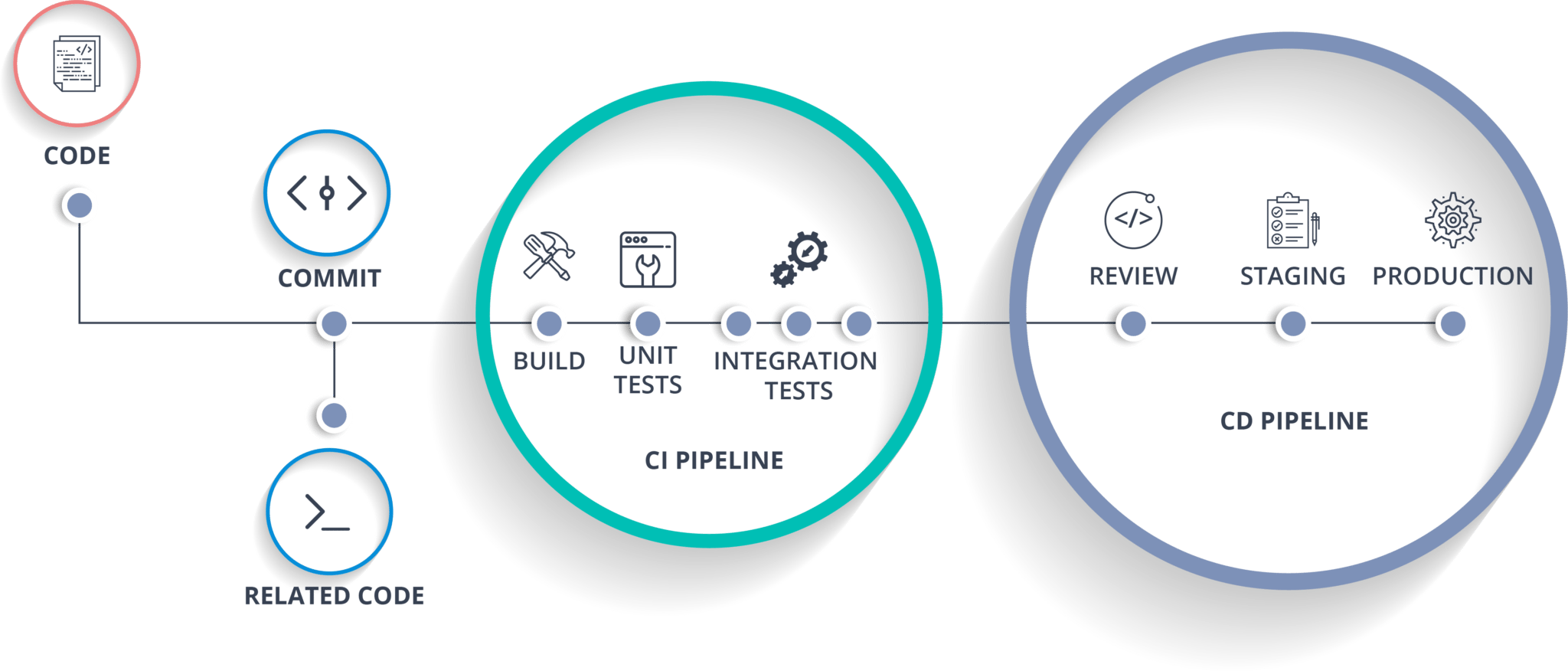

In Part 2, we will go deeper and explain:

- How DNS actually works step by step

- DNS hierarchy (Root, TLD, Authoritative servers)

- What happens when you type a domain in a browser

- DNS caching and TTL

Next Steps

DevOps tutorial: https://www.youtube.com/embed/6pdCcXEh-kw?si=c-aaCzvTeD2mH3Gv
